I just had an interesting discussion on Raspberry Pi and RavenDB over Twitter. The 140-character limit is a PITA when you try to express complex topics, so I thought that I would do a better job explaining it here.

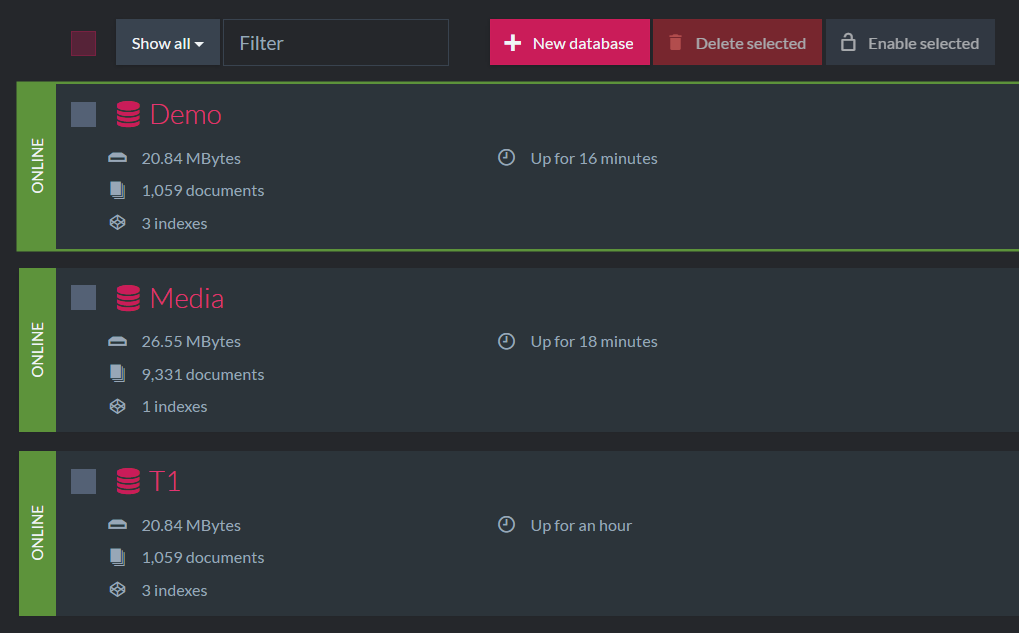

RavenDB is running on the Raspberry Pi and we routinely use it internally for development and demonstration purposes. That seems to have run into some objections, in particular: “Looks like a toy and shows no real value and can also show poor performance and make uneducated people concerned” and “Nobody is going to deploy a database on a machine with quad cores and lack of RAM and the kind of poor IO a Pi has.”

In fact, I wish that I had the ability to purchase a few dozen Raspberry Pi Zeros, since that would bring the per-unit cost down significantly. But why bother?

Let us start with the things that I think we all agree on: the Pi isn’t a suitable production machine. Well, sort of. If you don’t have any high performance requirements, it is a perfectly suitable machine. We have one that is used to show the build status and various metrics, and it is a production machine (in the sense that if it breaks, it needs fixing), but that is twisting things around.

We have two primary use cases for RavenDB on Raspberry Pi: internal testing and external demos. Let us examine each scenario in turn.

Internal testing mostly refers to developers having multiple Pis on their desks, participating in a cluster and serving as physically remote endpoints. We could likely handle that with a container / VM easily enough, but it is much nicer to have a separate machine (one that gets knocked to the floor, has its network cable unplugged, etc.) for certain kinds of experiments. It also provides us with assurance that we are actually hitting a real network and dealing with actual hardware. A nice “problem” that we have with the Pi is that it is pretty slow on IO, especially since we typically run it on an internal SD card, so it gives us a slightly slower system overall and prevents the usual fallacies of distributed computing from taking hold. One of our Pis would crash when used under load, and it was an interesting demonstration of how to deal with a flaky node (the problem was that we used the wrong power supply, if you care).

Using the Pis as semi-realistic nodes helps us avoid certain common mistaken assumptions, but it is by no means a replacement for actual structural testing of those (Jepsen for certain parts, actual tests with various simulated failures). Another important factor here is that the Pi is cool. It is really funny to see how some people in the office treat their Pis. And it makes for a nicer work environment than just running a bunch of VMs.

Running on much slower hardware has also enabled us to find and resolve a few bottlenecks much earlier (and cheaper) in the process, which is always nice. But more importantly, it gives us a consistent low-end machine that we can try things on, and verify that even on something like that, our minimum SLA is met.

So that is it in terms of the internal stuff, but I’ll have to admit that those uses were mostly born out of our desire to be able to demo RavenDB on a Raspberry Pi.

You might reasonably ask, why on earth would you want to demo your product on a machine that is literally decades behind the curve? (In 1999, a Dell PowerEdge 6450 had 4 PIII Xeon 550 CPUs and 8 GB RAM and sold for close to $20,000.)

It circles back again to the Raspberry Pi being cool, but a lot of that has to do with the environment in which we give the demos. Most of our demos are given at a booth in a conference. In those cases, we have 5 – 10 minutes to give a complete demo of RavenDB. This is obviously impossible, so what we are actually doing is giving a demo of the highlights, and providing enough information that people will go and seek out more on their own.

That means that we have our sexiest features front and center. And one of those features is RavenDB’s clustering. A lot of the people we meet in conferences have very little to no experience with NoSQL, and when they do have some experience, it is often mixed.

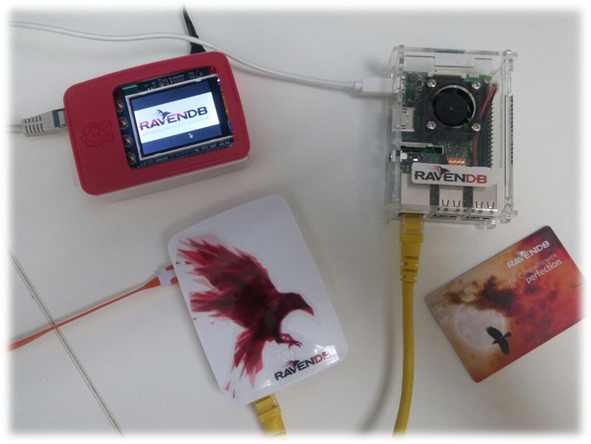

A really cool demo that we do is the “time to cluster”, in which we ask one of the people listening to pull out a phone and set up a timer, and see how much time it takes us to build a multi master cluster with full failover capabilities. (Our current record, if anyone wants to know, is about 37 seconds, but we have a bug report to streamline this.) Once that is set up, we can show how RavenDB works in the presence of failures and network issues.

We could do all of that virtually, using firewall / iptables or something like that, but people accustomed to “setting up a cluster takes at least a week or two” are already suspicious that we couldn’t possibly make this work. Having them physically disconnect the server from the network and see how it behaves gives a much better demo experience, and it allows us to bypass a lot of the complexities involved in explaining some of the concepts around distributed computing.

One of the most common issues, by the way, is the notion of a partition. It is relatively easy to explain to a user that a server may go down, but some people have a hard time realizing that a node can be up, yet unable to connect to some of the network. When you are actually setting up the wires so they can see it, you can see a light bulb moment going on.
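The difference between a crashed node and a partitioned one can also be sketched in a few lines of code. The toy model below is not RavenDB code and does not use any RavenDB API. The `Network` class, its methods, and the majority check are all hypothetical, made up only to illustrate that "up" and "reachable" are separate properties.

```python
from collections import deque


class Network:
    """Toy model (hypothetical): nodes are up or down, links are intact or cut."""

    def __init__(self, nodes):
        self.up = {n: True for n in nodes}
        # Start fully connected: every pair of distinct nodes shares a link.
        self.links = {frozenset((a, b)) for a in nodes for b in nodes if a != b}

    def cut(self, a, b):
        """Unplug the cable between a and b; both nodes keep running."""
        self.links.discard(frozenset((a, b)))

    def crash(self, node):
        """Take the node itself down."""
        self.up[node] = False

    def reachable_from(self, start):
        """Live nodes that start can talk to, directly or via other live nodes."""
        if not self.up[start]:
            return set()
        seen, queue = {start}, deque([start])
        while queue:
            current = queue.popleft()
            for other in self.up:
                if other in seen or not self.up[other]:
                    continue
                if frozenset((current, other)) in self.links:
                    seen.add(other)
                    queue.append(other)
        return seen

    def sees_majority(self, node):
        # Assumption for illustration: a node is only useful to the cluster
        # while it can reach more than half of all configured nodes.
        return len(self.reachable_from(node)) > len(self.up) // 2


# Partition: C stays up, but both of its cables are pulled.
partitioned = Network(["A", "B", "C"])
partitioned.cut("C", "A")
partitioned.cut("C", "B")
c_is_up = partitioned.up["C"]
c_reaches = partitioned.reachable_from("C")
c_has_majority = partitioned.sees_majority("C")
a_has_majority = partitioned.sees_majority("A")

# Crash: C is simply gone, which is the case most people already picture.
crashed = Network(["A", "B", "C"])
crashed.crash("C")
crashed_c_reaches = crashed.reachable_from("C")
```

In the partitioned case, `C` is still running and can still reach itself, yet it cannot see a majority, while `A` and `B` carry on together. From the point of view of `A` and `B`, the two scenarios look the same, which is exactly the part that is hard to get across without pulling a real cable.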

A cool demo might sound like it isn’t important, but it generates a lot of interest and people really like it. Because the Raspberry Pi isn’t a $20,000 machine, we have taken to bringing a few of them with us to every conference, and just raffling them off at the end of the conference. The very fact that we treat the Raspberry Pi as a toy makes this very attractive for us.

As for people assuming that the performance on the Pi is the actual performance on real hardware… We haven’t run into those yet, nor do I believe that we are likely to. To be frank, we are fast enough that this isn’t really an issue unless you are purposefully trying to load test the system. Hell, I have production systems right now that I could handle with a trio of Pis without breaking a sweat. At one point, we had a customer visit where we literally left them with a Raspberry Pi as their development server because it was the simplest way to get them started. I don’t expect to be shipping a lot of RavenDB Development “Servers” in the future, but it was fun to do.

All in all, I think that this has been a great investment for us, and we expect to see the Pis generate interest (and RavenDB itself keep it) whenever we show them.

If you are around NDC London, we have a few guys there in a booth right now, and they’ll raffle a few Raspberry Pis by the end of the week.